Automated Crawling of Data with Python

In this tutorial, we'll explore a basic example of web scraping using Python and the BeautifulSoup library. Our goal is to fetch pages from a website, extract the necessary information from the HTML content, and save it to a CSV file for further processing. Whether you're a beginner or looking to refresh your web scraping skills, this guide will walk you through the entire process step by step.

What You'll Learn

- Fetching webpage data with the requests library.

- Parsing HTML with BeautifulSoup.

- Extracting specific data using CSS selectors.

- Logging output to the console to track progress.

- Saving the scraped data into a CSV file.

- Implementing a page limit to avoid infinite loops.

Prerequisites

Before you begin, ensure you have the following Python packages installed:

- requests

- beautifulsoup4

You can install these packages using pip:

```
pip install requests beautifulsoup4
```

The Example Script

Below is the complete Python script that scrapes the data from a target website, logs each row to the console, and writes the final output to a CSV file. In this example, we scrape a colour guide website, extract various details such as the hex value of the colour, the colour code, name, variation, car brand, year, and product codes for spray and touch-up paints.

```python
import requests
from bs4 import BeautifulSoup
import csv
import time


def scrape_motip_colors():
    base_url = "https://www.motip.com/en-en/colourguide?page="
    page = 1
    all_data = []

    while True:
        if page > 240:  # Stop after page 240 to prevent redirection loops
            print(f"Reached the last allowed page: {page-1}. Stopping.")
            break

        url = f"{base_url}{page}"
        response = requests.get(url)
        if response.status_code != 200:
            print(f"Failed to retrieve page {page}. Status code: {response.status_code}")
            break

        soup = BeautifulSoup(response.text, 'html.parser')
        # Each row with the full set of data is contained in .color-guide-table__row
        rows = soup.select('.color-guide-table__row')
        if not rows:
            print(f"No more rows found. Stopping at page {page}.")
            break

        for row in rows:
            try:
                # Extract the hex color value from the style attribute
                bg_span = row.select_one('.color-guide-row__bg')
                hex_value = ""
                if bg_span and bg_span.has_attr('style'):
                    # Expecting style like "background-color: #7c0017;"
                    style = bg_span['style']
                    hex_value = style.split('background-color:')[-1].strip().rstrip(';')

                # Extract the Colour code
                code_div = row.select_one('div[data-label="Colour code"] span')
                color_code = code_div.get_text(strip=True) if code_div else ""

                # Extract the Colour name
                name_div = row.select_one('div[data-label="Colour name"] span')
                color_name = name_div.get_text(strip=True) if name_div else ""

                # Extract Variation
                variation_div = row.select_one('div[data-label="Variation"]')
                variation_text = variation_div.get_text(strip=True) if variation_div else ""

                # Extract Car Brand
                brand_div = row.select_one('div[data-label="Car Brand"] span')
                car_brand = brand_div.get_text(strip=True) if brand_div else ""

                # Extract Year
                year_div = row.select_one('div[data-label="Year"] span')
                year_text = year_div.get_text(strip=True) if year_div else ""

                # Extract Spray (400 ML) product code
                spray_div = row.select_one('div[data-label="site.color_guide.label.spray"] span')
                spray_text = spray_div.get_text(strip=True) if spray_div else ""

                # Extract Touch-up (12 ML) product code
                touchup_div = row.select_one('div[data-label="site.color_guide.label.touch_up"] span')
                touch_up_text = touchup_div.get_text(strip=True) if touchup_div else ""

                row_data = [hex_value, color_code, color_name, variation_text, car_brand, year_text, spray_text, touch_up_text]
                all_data.append(row_data)
                # Log the scraped data for each row to the console
                print(f"Scraped row on page {page}: {row_data}")
            except Exception as e:
                print(f"Error processing a row on page {page}: {e}")
                continue

        print(f"Page {page} scraped successfully.")
        page += 1
        time.sleep(1)  # Be nice to the server

    # Write the scraped data to CSV
    with open('motip_colors.csv', 'w', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        writer.writerow(["Hex Value", "Color Code", "Colour Name", "Variation", "Car Brand", "Year", "Spray 400 ML", "Touch-up 12 ML"])
        writer.writerows(all_data)

    print("Data saved to motip_colors.csv")


if __name__ == "__main__":
    scrape_motip_colors()
```

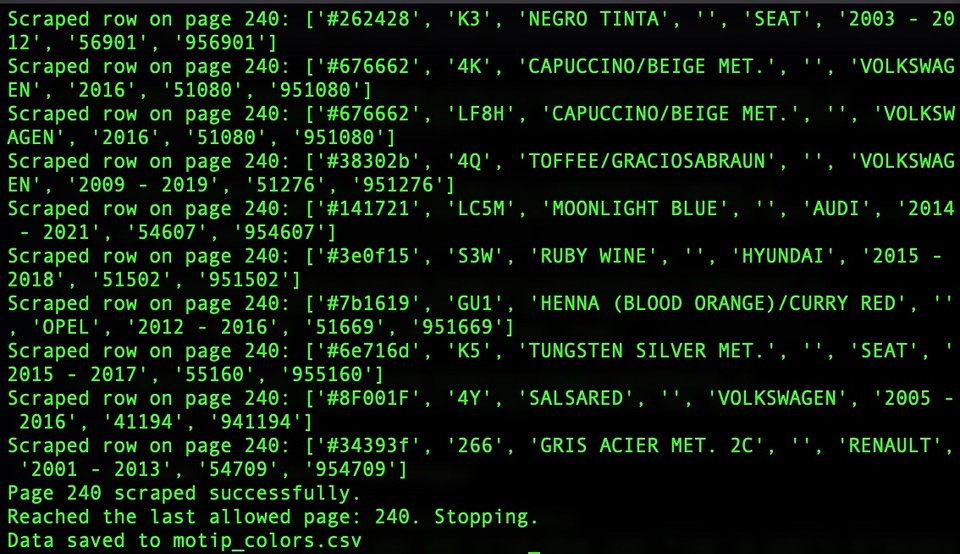

Figure 1: Python web scraping of data with BeautifulSoup.

How the code works

Initialization

The script starts by setting up the base URL and initializing the page counter and data list.

Looping through pages

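A while loop fetches pages one at a time and stops after page 240. This cap guards against redirects that could otherwise keep the loop running indefinitely. The loop also ends early if a request returns a status code other than 200 or if a page contains no rows.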

For each page, a GET request is made using the requests library.
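The stop conditions can be looked at in isolation with a small sketch. Here `fetch_rows` is a hypothetical stand-in for the request-and-parse step (it is not part of the script above), so the loop can be exercised without touching the network, and `max_pages` plays the role of the 240-page cap.

```python
def crawl_pages(fetch_rows, max_pages=240):
    """Collect rows page by page until a page is empty or the cap is hit.

    fetch_rows is a hypothetical callable: it takes a page number and
    returns a list of rows (an empty list when the page has no data).
    """
    all_rows = []
    page = 1
    while page <= max_pages:
        rows = fetch_rows(page)
        if not rows:
            break  # No data on this page, so assume we ran past the end
        all_rows.extend(rows)
        page += 1
    return all_rows


# Fake data source for illustration: three pages of two rows, then nothing.
def fake_fetch(page):
    return [f"page{page}-row{i}" for i in (1, 2)] if page <= 3 else []


collected = crawl_pages(fake_fetch)           # stops at the empty page 4
capped = crawl_pages(fake_fetch, max_pages=2)  # stops at the page cap
print(len(collected), len(capped))
```

Writing the condition as `while page <= max_pages` keeps the cap in the loop header, which is one way to make the upper bound hard to miss.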

Parsing the HTML

BeautifulSoup parses the returned HTML.

Each row of data is located using the CSS selector .color-guide-table__row.
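To see row selection on its own, the sketch below parses a small inline HTML string instead of a live page. The markup and the values in it are made up for illustration; only the class name and the data-label selectors are taken from the script, and `cell_text` is a hypothetical helper.

```python
from bs4 import BeautifulSoup

# Made-up markup, loosely modelled on the selectors used in the script.
sample_html = """
<div class="color-guide-table__row">
  <div data-label="Colour code"><span>ABC123</span></div>
  <div data-label="Colour name"><span>Example Red</span></div>
</div>
<div class="color-guide-table__row">
  <div data-label="Colour code"><span>XYZ789</span></div>
</div>
"""

soup = BeautifulSoup(sample_html, "html.parser")
rows = soup.select(".color-guide-table__row")


def cell_text(row, label):
    """Return the text of the span inside the labelled cell, or ""."""
    cell = row.select_one(f'div[data-label="{label}"] span')
    return cell.get_text(strip=True) if cell else ""


parsed = [(cell_text(r, "Colour code"), cell_text(r, "Colour name")) for r in rows]
print(parsed)
```

The second row has no colour name cell, so the helper falls back to an empty string, mirroring the `if ... else ""` pattern in the script.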

Extracting data

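Each field (colour code, colour name, variation, car brand, year, and the spray and touch-up product codes) is read with a nested CSS selector keyed on the cell's data-label attribute. The hex value is taken from the inline style attribute of the .color-guide-row__bg element. Any field that is missing from a row is stored as an empty string.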

The script processes each row, and the scraped information is logged to the console for visibility.
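The script pulls the hex value out of the style string with `split`, which works for a style like "background-color: #7c0017;". As a possible alternative, and only a sketch, a regular expression can pick out just the colour even when the style contains other declarations. The name `hex_from_style` is hypothetical.

```python
import re

# Matches a 6- or 3-digit hex colour that follows "background-color:".
HEX_IN_STYLE = re.compile(r"background-color:\s*(#[0-9a-fA-F]{6}|#[0-9a-fA-F]{3})\b")


def hex_from_style(style):
    """Return the hex colour from an inline style string, or "" if absent."""
    match = HEX_IN_STYLE.search(style or "")
    return match.group(1).lower() if match else ""


print(hex_from_style("background-color: #7c0017;"))
print(hex_from_style("color: #fff; background-color:#ABCDEF; width: 10px"))
print(hex_from_style("width: 10px"))
```

With the second input, the `split` approach would also return the trailing "; width: 10px", which is the case this variant is meant to handle.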

Saving the data

After all pages have been scraped, the data is written to a CSV file (motip_colors.csv), which can be used for further processing or analysis.
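The CSV step can be tried without creating a file by writing to an in-memory buffer. The rows below are made-up values, and the shortened header is an assumption for brevity; the `csv.writer` calls are the same ones the script uses.

```python
import csv
import io

header = ["Hex Value", "Colour Code", "Colour Name"]
rows = [
    ["#7c0017", "ABC123", "Example Red"],   # made-up values
    ["#ffffff", "XYZ789", "White, pearl"],  # the comma forces quoting
]

# newline="" lets the csv module control line endings itself.
buffer = io.StringIO(newline="")
writer = csv.writer(buffer)
writer.writerow(header)
writer.writerows(rows)

csv_text = buffer.getvalue()
print(csv_text)

# Reading the text back yields the original rows, embedded comma intact.
read_back = list(csv.reader(io.StringIO(csv_text, newline="")))
```

Because the csv module quotes fields that contain commas, a colour name such as "White, pearl" survives the round trip as a single column.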

Rate limiting

A delay (time.sleep(1)) is included between page requests to avoid overloading the server.
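The pacing idea can be sketched separately from the scraper. In this minimal example, `fetch_with_delay` and its `fetch` and `sleep` parameters are hypothetical; they are injected so the pauses can be observed without real requests or real waiting.

```python
import time


def fetch_with_delay(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # Pause before every request except the first
        results.append(fetch(url))
    return results


pauses = []
pages = fetch_with_delay(
    ["page-1", "page-2", "page-3"],
    fetch=lambda url: f"<html>{url}</html>",  # stand-in for a real request
    delay_seconds=1.0,
    sleep=pauses.append,  # Record the requested pauses instead of sleeping
)
print(pages, pauses)
```

In real use the default `sleep=time.sleep` would apply, giving one pause between each pair of requests.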

Conclusion

This tutorial demonstrates a fundamental web scraping technique using Python and BeautifulSoup. By understanding each step, from sending requests and parsing HTML to logging data and saving it into a CSV file, beginners can build a solid foundation for more advanced crawling projects. Experiment with the code, and consider extending it with more robust error handling, dynamic user-agent headers, or multi-threading for better performance.

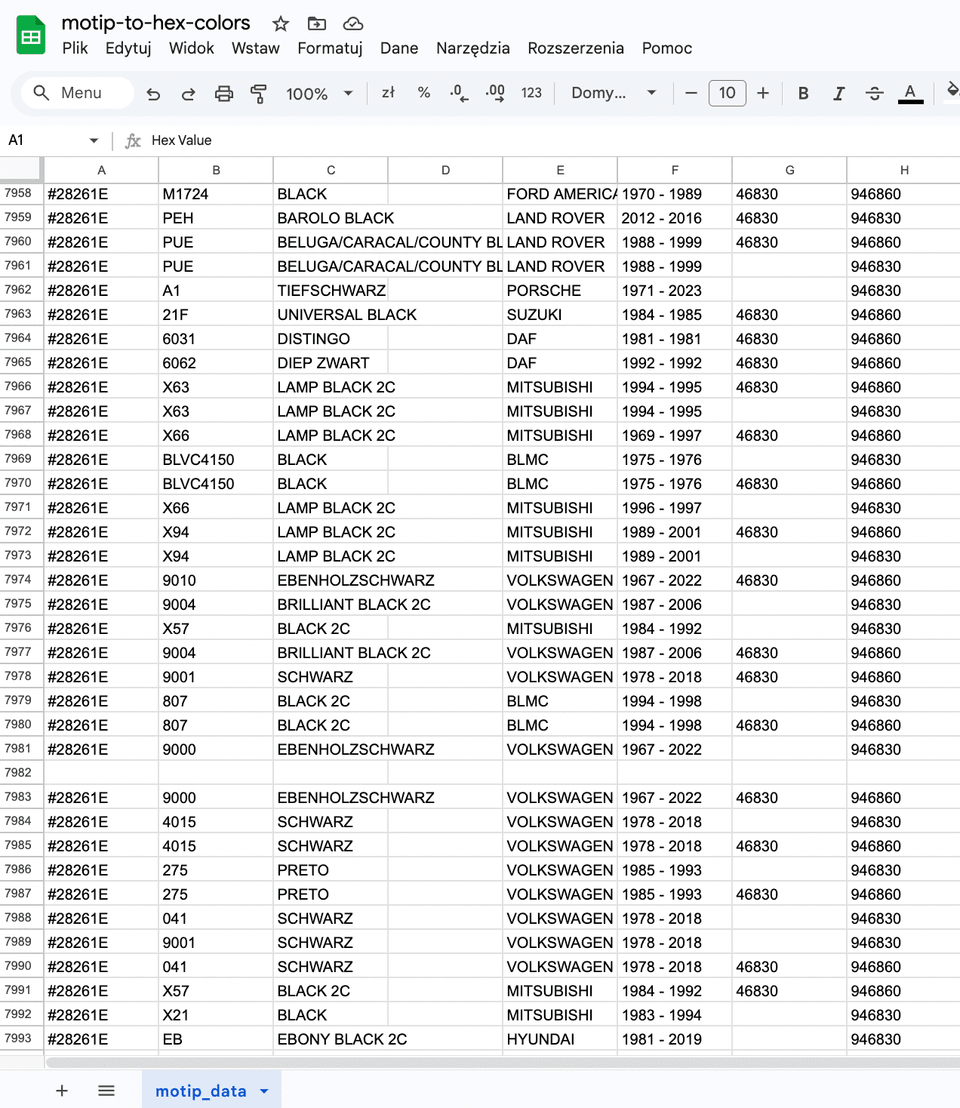

Figure 2: Importing the scraped CSV data into Google Sheets.

Happy scraping!
